Although four billion users are on the Internet, three billion people in rural and developing areas still have no access to it [1]. Because of the lower population density and the lack of infrastructure, the return on investment in these areas is much lower than in cities. In our lab, the Information and Communication Technology for Development (ICTD) lab at the University of Washington, we expand Internet access by developing a small-scale LTE core network that communities can deploy and maintain by themselves. The project is called Community LTE Networks, or CoLTE for short.

I have been working on this project for over a year now. During that time, we deployed CoLTE in two places: Bokondini, Indonesia and Oaxaca, Mexico. In January this year, I was part of an awesome team of three that deployed the network in Oaxaca. However, unexpected situations always come up during a field deployment. In this blog post, I would like to share how we handled them. First, I will discuss how CoLTE works, followed by why we chose Oaxaca as our site, how we set up the network, and what we learned from this field trip.

How CoLTE Works

With an Internet connection, a computer to run the Evolved Packet Core (EPC), and a base station, we can set up a small LTE network anywhere! The EPC is the network core that manages everything from network subscribers and packet forwarding to mobility management [3]. At the ICTD lab, we wrote a lot of the code to operate the EPC. In the figure below, you can see that the EPC is connected to the rest of the Internet via a connection on the left side. This connection links the small town to the rest of the world, and could be a satellite link, a wireless link, or a fiber-optic cable. To the right of the EPC is the base station (eNodeB). The base station sends out the LTE radio signal, and mobile phones in the network connect to it.
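
To make the division of labor concrete, here is a minimal Python sketch of the three pieces described above. It is only an illustration and not CoLTE's actual code: the class names, method names, and subscriber identifiers are all made up for this post, and a real EPC does far more (authentication, billing, tunneling, and so on).

```python
from dataclasses import dataclass, field


@dataclass
class EPC:
    """Toy stand-in for the network core (hypothetical, not the CoLTE code)."""
    subscribers: set = field(default_factory=set)  # SIM identities we provisioned
    attached: dict = field(default_factory=dict)   # SIM identity -> serving eNodeB name

    def attach(self, imsi: str, enodeb_name: str) -> bool:
        """Subscriber management: admit a phone only if its SIM is known."""
        if imsi not in self.subscribers:
            return False
        # Mobility management: remember which base station serves this phone.
        self.attached[imsi] = enodeb_name
        return True

    def forward_uplink(self, imsi: str, payload: str) -> str:
        """Packet forwarding: send an attached phone's traffic out the backhaul."""
        if imsi not in self.attached:
            raise PermissionError(f"{imsi} is not attached")
        return f"backhaul <- {payload}"


@dataclass
class ENodeB:
    """Toy base station: relays attach requests from phones to the EPC."""
    name: str
    epc: EPC

    def connect_phone(self, imsi: str) -> bool:
        return self.epc.attach(imsi, self.name)


# Made-up identifiers, for illustration only.
epc = EPC(subscribers={"001010000000001"})
tower = ENodeB(name="enb-1", epc=epc)

print(tower.connect_phone("001010000000001"))          # provisioned SIM
print(tower.connect_phone("001010000000099"))          # unknown SIM
print(epc.forward_uplink("001010000000001", "hello"))  # traffic leaves via backhaul
```

The point of the sketch is simply that the base station handles the radio side, while every decision about who may connect and where traffic goes lives in the EPC.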

A CoLTE network comprised of an Internet connection, EPC, and eNodeB

Why Oaxaca?

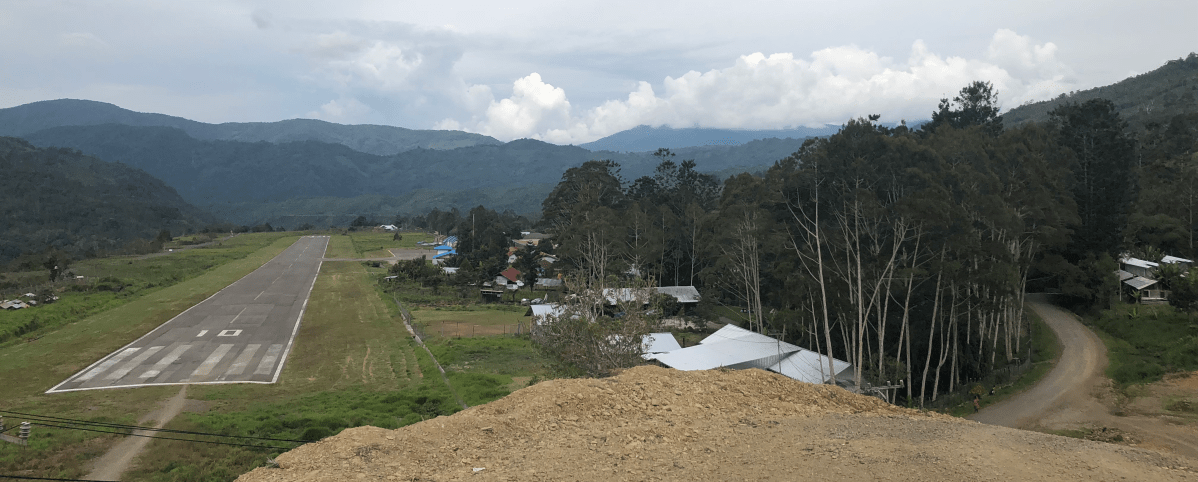

Before jumping into the details of the deployment, I will discuss why we chose to go to Oaxaca. Oaxaca is a state in the southeastern part of Mexico, and is known for its well-preserved culture and Indigenous people [2]. Because the area is mountainous, communities are separated from one another by natural barriers, which makes it difficult to build an extensive mobile network infrastructure. This makes the area a great fit for community network research. Telecomunicaciones Indígenas Comunitarias (TIC) is an organization based in Oaxaca City that works to connect Indigenous communities in and around the Oaxaca area. TIC has already deployed several 2G networks, and has only recently become more interested in LTE. So our lab came to Oaxaca to collaborate with TIC on deploying an LTE network in a community outside Oaxaca.

How did we set up CoLTE in Oaxaca?

There were three of us on this trip: Spencer, Esther, and me. Spencer is a postdoctoral researcher who has been leading this project from the beginning, and Esther is a Ph.D. student in our lab. While I spent only a week in Oaxaca due to academic constraints, Spencer and Esther spent two weeks.

Before we left Washington for Oaxaca, we prepared and configured the equipment, including mini-PCs with the EPC installed. To make travel easier, the two eNodeBs (base stations) had been shipped directly to TIC’s office in Oaxaca City before we arrived. One was manufactured by BaiCells, and the other by Nokia. We expected to configure them to connect to our EPC at the TIC office in the first few days, and then go to the site to install them. Unfortunately, things did not go as planned.

Spencer had set up a CoLTE network with a BaiCells eNodeB before, so we thought this one would cause us no problems. However, the equipment turned out to be a different version from the one Spencer had worked with. We accidentally misconfigured the eNodeB, which locked us out of its settings. The only way to connect to it again was via the optical fiber port. Unfortunately, we did not have a fiber-optic-to-Ethernet converter, so we ordered one from the source that would deliver it to us the fastest.

We did not want to waste time, so while we waited for the fiber-optic-to-Ethernet converter, we attempted to work with the Nokia eNodeB. We followed the instructions in the manual, but it did not start up. We reached out to Nokia support immediately, as they were the best people to help, but because we were in different time zones, email was not the best mode of communication. Soon, Spencer set up a phone call so that we could communicate more effectively.

Once the converter arrived, we agreed that we had a better chance of getting the BaiCells eNodeB to work, so we went back to working on it. After two days, we were ready to install the network! Sadly, I had to fly back to Seattle the next day, so I did not get the opportunity to install the components on site with Spencer and Esther.

Conclusion

Field deployments do not always go as planned. Although we had prepared our EPC in advance and were certain of how to connect to the eNodeB, something unexpected can always come up. Time constraints are a big part of field deployment, so adjust your plan according to what happens and do what you think is best in that situation. For the next deployment, we will definitely check the exact devices that we will be working with, simulate the network setup, and test it in our lab if possible. Although I did not have the opportunity to go to the site, I am really grateful to be a part of this team and this effort to increase Internet accessibility around the world.

References

[1] International Telecommunication Union. (2017). ICT Facts and Figures 2017. Geneva, Switzerland.

[2] Martin, G. J., Camacho Benavides, C. I., Del Campo García, C. A., Anta Fonseca, S., Chapela Mendoza, F., & González Ortíz, M. A. (2011). Indigenous and community conserved areas in Oaxaca, Mexico. Management of Environmental Quality: An International Journal, 22(2), 250-266.

[3] Sevilla, S., Kosakanchit, P., Johnson, M., & Heimerl, K. (2018, November). Building Community LTE Networks with CoLTE. The Community Network Manual: How to Build the Internet Yourself, 75-102.